While hreflang is a fairly simple concept, there’s plenty of issues that can be encountered in the implementation.

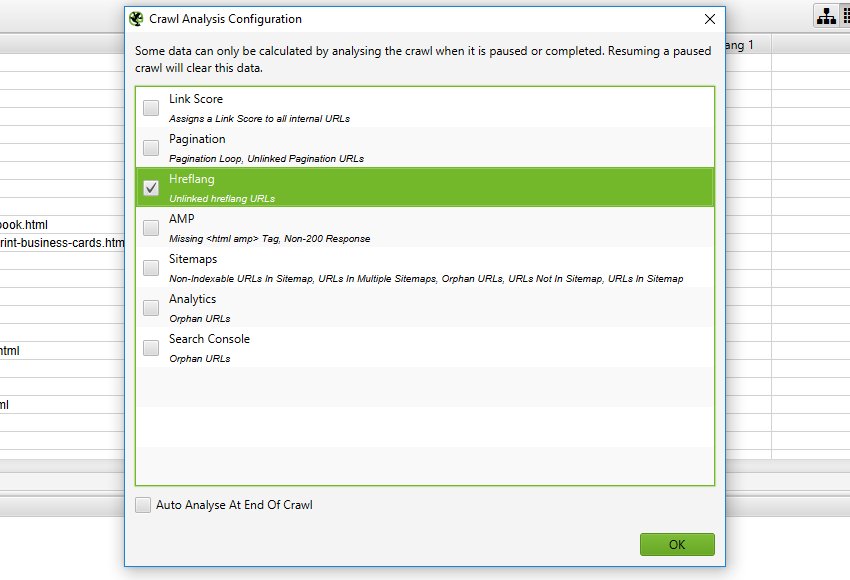

While users have historically used custom extraction to collect hreflang, by default these can now be viewed under the ‘hreflang’ tab, with filters for common issues. They are also extracted from Sitemaps when crawled in list mode. The SEO Spider now extracts, crawls and reports on hreflang attributes delivered by HTML link element and HTTP Header. 4) hreflang Attributesįirst of all, apologies, this one has been a long time coming. You can read more about testing robots.txt in our user guide. Please note – The changes you make to the robots.txt within the SEO Spider, do not impact your live robots.txt uploaded to your server.

SCREAMING FROG SEO SPIDER AT SCREAMINGFROG.CO.UK. UPDATE

We don’t have any problem parsing it and believe Google should really update their behaviour to make up for potential mistakes. According to the spec, it invalidates the line – however, this will generally only ever be due to user error. We considered including a check for a double UTF-8 byte order mark (BOM), which can be a problem for Google.

The custom robots.txt uses the selected user-agent in the configuration and works well with the new fetch and render feature, where you can test how a web page might render with blocked resources. The new feature allows you to add multiple robots.txt at subdomain level, test directives in the SEO Spider and view URLs which are blocked or allowed.ĭuring a crawl you can filter blocked URLs based upon the custom robots.txt (‘Response Codes > Blocked by robots.txt’) and see the matches robots.txt directive line.Ĭustom robots.txt is a useful alternative if you’re uncomfortable using the regex exclude feature, or if you’d just prefer to use robots.txt directives to control a crawl. You can download, edit and test a site’s robots.txt using the new custom robots.txt feature under ‘Configuration > robots.txt > Custom’. The pages this impacts and the individual blocked resources can also be exported in bulk via the ‘Bulk Export > Response Codes > Blocked Resource Inlinks’ report. The blocked resources can also be seen under ‘Response Codes > Blocked Resource’ tab and filter. The SEO Spider now reports on blocked resources, which can be seen individually for each page within the ‘Rendered Page’ tab, adjacent to the rendered screen shots. Viewing the rendered page is vital when analysing what a modern search bot is able to see and is particularly useful when performing a review in staging, where you can’t rely on Google’s own Fetch & Render in Search Console. With Google’s much discussed mobile first index, this allows you to set the user-agent and viewport as Googlebot Smartphone and see exactly how every page renders on mobile. This feature is enabled by default when using the new JavaScript rendering functionality and allows you to set the AJAX timeout and viewport size to view and test various scenarios. This populates the lower window pane when selecting URLs in the top window. 
You can now view the rendered page the SEO Spider crawled in the new ‘Rendered Page’ tab which dynamically appears at the bottom of the user interface when crawling in JavaScript rendering mode. 1) ‘Fetch & Render’ (Rendered Screen Shots) Since the release of rendered crawling in version 6.0, our development team have been busy working on more new and exciting features.

I’m delighted to announce Screaming Frog SEO Spider version 7.0, codenamed internally as ‘Spiderman’.
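As a rough illustration of what collecting hreflang from both delivery methods involves, here is a minimal Python sketch; it is not how the SEO Spider works internally. It reads `link` elements carrying `rel="alternate"` and an `hreflang` attribute with the standard library's `html.parser`, reads an HTTP `Link` header with a deliberately simplified splitter (it assumes commas never appear inside URLs or quoted values), and then checks one common issue, missing return links: Google's documentation states that if two pages don't both point to each other, the annotations are ignored. The names `HreflangParser`, `parse_link_header` and `missing_return_links`, and the example.com URLs, are hypothetical, and XML Sitemap annotations aren't covered.

```python
# Sketch only: helper names are hypothetical and the parsing is simplified.
import re
from html.parser import HTMLParser


class HreflangParser(HTMLParser):
    """Collect (hreflang, href) pairs from <link rel="alternate" hreflang=...> elements."""

    def __init__(self) -> None:
        super().__init__()
        self.annotations: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = {name: value or "" for name, value in attrs}
        rels = attr.get("rel", "").lower().split()
        if "alternate" in rels and attr.get("hreflang") and attr.get("href"):
            # Language tags are case-insensitive, so normalise to lower case
            self.annotations.append((attr["hreflang"].lower(), attr["href"]))


def parse_link_header(header: str) -> list[tuple[str, str]]:
    """Collect (hreflang, href) pairs from an HTTP Link header value.

    Simplified: assumes commas only separate entries and never appear
    inside URLs or quoted parameter values.
    """
    annotations = []
    # Split on commas that are followed by the next "<url>" entry
    for part in re.split(r",\s*(?=<)", header.strip()):
        match = re.match(r"\s*<([^>]*)>(.*)", part)
        if not match:
            continue
        href, params_text = match.groups()
        params = {}
        for param in params_text.split(";"):
            key, sep, value = param.partition("=")
            if sep:
                params[key.strip().lower()] = value.strip().strip('"')
        rels = params.get("rel", "").lower().split()
        if "alternate" in rels and params.get("hreflang"):
            annotations.append((params["hreflang"].lower(), href))
    return annotations


def missing_return_links(site: dict[str, list[tuple[str, str]]]) -> list[tuple[str, str]]:
    """Return (page, alternate) pairs where the alternate does not link back.

    `site` maps each crawled URL to its (hreflang, href) annotations.
    Alternates that were never crawled have no known annotations, so they
    are flagged as well.
    """
    problems = []
    for page, annotations in site.items():
        for _, alternate in annotations:
            if alternate == page:
                continue  # self-referencing annotation, nothing to confirm
            back_links = {href for _, href in site.get(alternate, [])}
            if page not in back_links:
                problems.append((page, alternate))
    return problems


# Example: the English page declares hreflang in its HTML head, while the
# German page only sends an HTTP Link header and has no return link.
en_parser = HreflangParser()
en_parser.feed(
    '<link rel="alternate" hreflang="en" href="https://example.com/en/">'
    '<link rel="alternate" hreflang="de" href="https://example.com/de/">'
)
en_parser.close()
site = {
    "https://example.com/en/": en_parser.annotations,
    "https://example.com/de/": parse_link_header(
        '<https://example.com/de/>; rel="alternate"; hreflang="de"'
    ),
}
print(missing_return_links(site))
# [('https://example.com/en/', 'https://example.com/de/')]
```

Lower-casing the hreflang values is a design choice that makes comparisons simpler, since language tags are case-insensitive.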

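Custom robots.txt testing comes down to matching URLs against directives for a chosen user-agent. The following is a minimal Python sketch using the standard library's `urllib.robotparser`, assuming the file is UTF-8 and stripping any leading byte order marks (including a doubled one) before parsing. It is only an approximation, not the SEO Spider's implementation: `parse_robots` and `blocked_urls` are hypothetical names, and `RobotFileParser` uses plain prefix matching with the first matching rule winning, so it does not reproduce Google's `*` and `$` wildcards or longest-match precedence.

```python
# Sketch only: hypothetical helpers built on the stdlib robots.txt parser,
# which uses prefix matching and does not support Google-style wildcards.
from urllib.robotparser import RobotFileParser

UTF8_BOM = "\ufeff"


def parse_robots(raw: bytes) -> RobotFileParser:
    """Parse robots.txt bytes, tolerating one or more leading UTF-8 BOMs."""
    text = raw.decode("utf-8", errors="replace")
    # Strip every leading BOM, so a doubled BOM cannot hide the first line
    text = text.lstrip(UTF8_BOM)
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


def blocked_urls(raw: bytes, user_agent: str, urls: list[str]) -> list[str]:
    """Return the URLs that the robots.txt disallows for the given user-agent."""
    parser = parse_robots(raw)
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# A robots.txt saved with a doubled UTF-8 BOM (EF BB BF twice)
robots_txt = b"\xef\xbb\xbf\xef\xbb\xbfUser-agent: *\nDisallow: /staging/\n"
urls = ["https://example.com/", "https://example.com/staging/page"]
print(blocked_urls(robots_txt, "Googlebot", urls))
# ['https://example.com/staging/page']
```

Without the `lstrip` call, the BOM characters stay attached to `User-agent`, the stdlib parser doesn't recognise that line, and the `Disallow` rule after it is silently dropped, so every URL would be reported as allowed.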